Google I/O 2025 – Full Executive Summary & Key Takeaways - EXC Summary

Learn the key announcements and themes from Google I/O '25.

The core message is the rapid advancement and integration of Google's AI models, particularly Gemini, across a vast range of products and services. Google is emphasizing the speed of innovation, the development of powerful AI agents capable of complex tasks, and the increasing personalization and multimodality of AI interactions.

A clear highlight was the significant growth in developer adoption of Gemini, with the event showcasing new applications across real-time translation, search, creative tools (video and music generation), robotics, accessibility, and even disaster response.

A significant focus is placed on the future of AI, particularly the concept of "world models" and the development of Android XR for next-generation computing experiences.

Key Themes and Important Ideas/Facts

Rapid Pace of Gemini Development and Deployment

- Google is shipping models and breakthroughs "faster than ever," no longer waiting for I/O to unveil significant advancements.

- "We have announced over a dozen models and research breakthroughs and released over 20 major AI..."

- This "Gemini era" is characterized by the swift integration of their "most intelligent model" into products.

Massive Growth in Gemini Usage and Developer Adoption

- A dramatic increase in token processing: "processing 480 trillion monthly tokens. That's about a 50x increase in just a year."

- Significant growth in developers using the Gemini API: "over 7 million developers have built with the Gemini API... over 5x growth since last I/O."

- Gemini usage on Vertex AI is "up more than 40 times since last year."
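The growth multiples quoted above can be sanity-checked with a little arithmetic. The sketch below is illustrative only: the 9.7 trillion baseline comes from the keynote quote listed under Notable Quotes, and the implied developer count from a year earlier is a rough derived estimate, not a figure Google stated.

```python
# Sanity check of the growth figures quoted in this section.
# Inputs are the numbers cited in the keynote; derived values are estimates.

TOKENS_LAST_YEAR = 9.7e12   # monthly tokens a year earlier (keynote quote)
TOKENS_NOW = 480e12         # monthly tokens at I/O 2025


def growth_multiple(before: float, after: float) -> float:
    """Return how many times larger `after` is than `before`."""
    return after / before


token_growth = growth_multiple(TOKENS_LAST_YEAR, TOKENS_NOW)

DEVELOPERS_NOW = 7_000_000  # "over 7 million developers"
DEVELOPER_GROWTH = 5        # "over 5x growth since last I/O"
# Rough estimate derived from the two figures above; not stated by Google.
implied_developers_last_year = DEVELOPERS_NOW / DEVELOPER_GROWTH

print(f"Token growth: {token_growth:.1f}x")
print(f"Implied developers a year ago: about {implied_developers_last_year:,.0f}")
```

The token ratio works out to roughly 49.5, which is consistent with the "about a 50x increase" wording.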

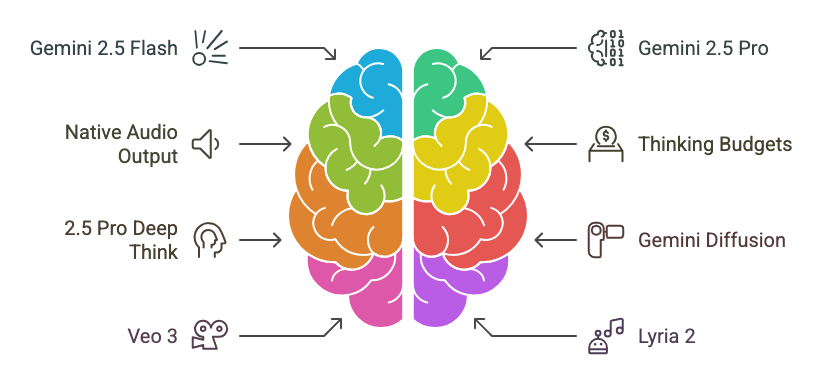

Expansion of Gemini Capabilities and Models

- Gemini 2.5 Flash and Pro: These are the key models being rolled out, with Flash emphasizing efficiency and lower latency, and Pro excelling in reasoning, code, and long context. Flash will be generally available in early June, with Pro following soon after.

- Native Audio Output: New text-to-speech capabilities with multi-speaker support (two voices initially) and the ability to seamlessly switch between languages while maintaining the same voice. Available in the Gemini API, with a Live API preview later.

- Thinking Budgets: A new feature rolling out to 2.5 Flash and Pro, allowing developers to control the tokens used for model "thinking" to balance cost and latency vs. quality.

- Gemini 2.5 Pro Deep Think: A new mode for 2.5 Pro offering enhanced thinking capabilities, currently in trusted tester preview for frontier safety evaluations.

- Gemini Diffusion: An experimental research model that applies diffusion to text and code generation (rather than image or video generation), with the version shown generating "five times faster than even 2.0 Flash-Lite."

- Veo 3: Google's state-of-the-art video generation model, now available, featuring enhanced visual quality, stronger understanding of physics, and "Native Audio generation" for sound effects, background sounds, and dialogue.

- Lyria 2: Google's generative music model, capable of generating "high-fidelity music and professional grade audio," available for enterprises, YouTube creators, and musicians.
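To make the thinking-budget idea above concrete, here is a minimal sketch of how a developer might attach a budget to a request. It only builds a request body and does not call any service. The `thinkingConfig` and `thinkingBudget` field names follow the Gemini API's public REST format as best understood at the time of writing and should be treated as an assumption to verify against current documentation; `build_request` and the budget values are hypothetical, and accepted ranges differ per model.

```python
import json


def build_request(prompt: str, thinking_budget: int) -> dict:
    """Build a generateContent-style request body with a thinking budget.

    Field names are assumed from the public REST format; verify before use.
    """
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {
            # Caps the tokens the model may spend on internal "thinking".
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }


# A small budget favors cost and latency; a larger one favors quality.
fast = build_request("Summarize this paragraph.", thinking_budget=0)
careful = build_request("Prove this lemma step by step.", thinking_budget=4096)

print(json.dumps(careful, indent=2))
```

The design point is that the budget is a per-request setting, so the same application can spend little on simple prompts and more on hard ones.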

Gemma: Strategic Value as Google's Family of Open Models

Gemma represents Google's strategic commitment to open models, providing a family of models designed for deep customization and deployment flexibility. Unlike Google's broader Gemini models, which are often consumed via APIs for general-purpose applications, Gemma's core purpose is to enable organizations and developers to fine-tune their own AI models for specific, often proprietary, and sensitive use cases. This is particularly valuable when the AI needs to understand an organization's unique data, learn the nuances of a specific business, or operate in environments requiring strict privacy or offline capabilities.

Key Strategic Advantages for Leaders:

- Unparalleled Customization and Control: Gemma models offer direct control and flexibility, allowing organizations to adapt the AI to their precise needs without relying solely on managed services. This is crucial for maintaining competitive advantage and handling sensitive information internally.

- Cost-Effective and Scalable On-Device AI:

  - Gemma 3n, a more recent and optimized version, can run on as little as two gigabytes of RAM, which makes it exceptionally lean and fast on mobile and edge hardware. It shares the same architecture as Gemini Nano, Google's multimodal on-device model.

  - This capability enables organizations to deploy powerful AI features directly on user devices, reducing cloud compute costs and enhancing user privacy by processing data locally. It offers a path to affordably scaling AI features to a massive audience.

- Accelerated Innovation via the "Gemmaverse": The "Gemmaverse" is a testament to Gemma's open nature, encompassing tens of thousands of model variants, tools, and libraries created by a vibrant developer community. With over 150 million downloads and nearly 70,000 community-created variants, Gemma fosters rapid innovation and provides a rich ecosystem for developing specialized AI solutions.

- Broad Adaptability and Multilingual Leadership: The Gemma family is designed for high adaptability across various domains. It stands out for its multilingual support, covering over 140 languages, and was described as the "best multilingual open model on the planet." This global reach is a significant asset for international operations.

Transformative Impact Across Industries:

Gemma's adaptability has demonstrated incredible promise in various sectors, leading to highly specialized and impactful applications:

- Healthcare (MedGemma): This collection of open models is Google's most capable for multi-modal medical text and image understanding. It enables developers to build custom health applications, such as analyzing radiology images or summarizing patient information for physicians, offering a foundation for significant advancements in medical AI.

- Accessibility (SignGemma): SignGemma is a new family of models trained to translate sign language into spoken-language text, focusing on translating American Sign Language into English. It is touted as the most capable sign language understanding model ever, offering a groundbreaking tool for improving communication and accessibility for deaf and hard-of-hearing communities.

- Novel Research and Understanding (DolphinGemma): Pushing the boundaries beyond human language, DolphinGemma is the world's first large language model for dolphins. Fine-tuned on decades of field research, it helps scientists understand dolphin communication patterns and can even generate new synthetic dolphin sounds. It can run on devices like a Pixel 9, which demonstrates the versatility of Gemma for entirely new and unconventional AI applications.

Deployment Flexibility: Fine-tuned Gemma models can be deployed through Google Cloud services (Cloud Run, Vertex AI) or via Google AI Edge for local deployment, providing strategic options based on an organization's infrastructure and privacy requirements.

In essence, Gemma provides a powerful, open, and adaptable foundation for leaders seeking to implement custom, privacy-preserving, and scalable AI solutions that can significantly enhance operational efficiency, drive innovation, and address unique business challenges.

AI Agents and Automation

- Project Mariner: A research prototype agent that can "interact with the web and get stuff done." Users assign agents time-consuming tasks such as research, planning, and data entry in natural language, and the agents can work on several tasks simultaneously in browsers running on virtual machines.

- Jules: An asynchronous coding agent now in public beta, capable of tackling complex tasks in large code bases, fixing bugs, and making updates, integrating with GitHub.

- The concept of a "universal AI assistant" that can "perform everyday tasks for us," "take care of mundane admin," and "surface delightful, new recommendations."

Personalization of AI

- Personalized Smart Replies: Smart Reply features that "sound like you," learning from your past communications and documents to generate replies matching your tone, style, and vocabulary. Available in Gmail this summer for subscribers.

- Personal Context in AI Mode (Search) and Gemini App: The ability for AI to connect with your personal Google information (with permission), such as search history, notes, emails, and documents, to provide more relevant and tailored responses and suggestions, "making it an extension of you." This is coming to AI Mode this summer and is being expanded in the Gemini app.

Transformation of Google Search with AI

- AI Overviews: Already showing significant adoption ("over 1.5 billion users every month") and driving growth in queries, particularly visual searches via Google Lens (65% YoY growth). AI Overviews are now faster and powered by the latest Gemini models, which take much of the legwork out of searching.

- All New AI Mode: A "total reimagining of Search" with advanced reasoning, powered by Gemini 2.5. Rolling out for everyone in the US starting today. It uses a "query fanout technique" to break down complex questions and conduct multiple searches simultaneously.

- Deep Research Capabilities in AI Mode: Coming to AI Mode in Labs, enabling users to "unpack a topic" and perform in-depth analysis from a single query.

- Agentic Checkout in Search: Search will soon help with tasks like booking event tickets, restaurant reservations, and appointments, acting as an agent to complete the purchase process.

- Multimodality in Search: Enhanced integration of visual search via Google Lens and bringing "Project Astra's live capabilities into AI Mode."
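The "query fanout technique" mentioned above for AI Mode (split a complex question into sub-queries, then run the searches simultaneously) can be illustrated with a toy sketch. This is not Google's implementation: `search` is a hypothetical stand-in for a backend call, and `decompose` uses a naive string split where a real system would presumably use a model to generate sub-queries.

```python
import asyncio


async def search(query: str) -> str:
    """Hypothetical stand-in for one search backend call."""
    await asyncio.sleep(0.01)  # simulate network latency
    return f"results for {query!r}"


def decompose(question: str) -> list[str]:
    """Toy decomposition: split on ' and '. A real system would use a model."""
    return [part.strip() for part in question.split(" and ") if part.strip()]


async def fan_out(question: str) -> dict[str, str]:
    """Run every sub-query concurrently and collect results by sub-query."""
    sub_queries = decompose(question)
    results = await asyncio.gather(*(search(q) for q in sub_queries))
    return dict(zip(sub_queries, results))


answers = asyncio.run(fan_out("best hiking boots and trail conditions in Utah"))
for sub_query, result in answers.items():
    print(sub_query, "->", result)
```

Because the sub-queries run concurrently, total latency is close to that of the slowest single search rather than the sum of all of them, which is the main appeal of fanning out.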

Advancements in Multimodality and Live Interaction

- Demonstrations of real-time speech translation directly in Google Meet (English and Spanish available for subscribers).

- Gemini's ability to understand images and provide contextual information or suggestions, demonstrated with deliberately misidentified objects ("Gemini is pretty good at telling you when you're wrong"). Rolling out to everyone on Android and iOS.

- Project Astra prototype showcasing a universal AI assistant that can see, hear, understand context, and perform actions based on visual and auditory input (e.g., bike repair scenario).

Future of AI: World Models and AGI

- Demis Hassabis discusses the ambition to extend Gemini to become a "world model" – a model that can "make plans and imagine new experiences by simulating aspects of the world, just like the brain does."

- This builds on previous work with AlphaGo and Genie 2 (generating 3D-simulated environments for future general agents).

- Understanding the physical environment is critical for robotics, leading to the development of Gemini Robotics.

- The ultimate goal is unlocking a "new kind of AI" that is "helpful in your everyday life, that's intelligent and understands the context you're in and that can plan and take action on your behalf."

Creative Tools Powered by AI

- Veo 3 (video generation with audio) and Lyria 2 (music generation) are highlighted as tools empowering creators.

- Collaboration with filmmakers like Darren Aronofsky to shape Veo's capabilities.

- Introduction of "Flow," an AI filmmaking tool combining Veo, Imagen, and Gemini, designed for creatives to fluidly express ideas and generate content.

Safety, Transparency, and Responsibility

- Mention of conducting "frontier safety evaluations" for new models like 2.5 Pro Deep Think.

- Improvements in security and transparency for developers building with Gemini 2.5, providing insights into model "thinking" and tool calls.

- Continued innovation in detecting AI-generated media with SynthID, which embeds invisible watermarks. Over 10 billion pieces of content have been watermarked, and a new detector can identify the watermark in various media types. Google is also expanding partnerships to increase watermark adoption and detection.

Accessibility and Societal Impact

- Project Astra demonstrated as a tool with potential for accessibility, showcased by helping a visually impaired musician navigate backstage and identify objects and information.

- Google's work with partners on FireSat, a constellation of satellites using AI to detect wildfires and provide near real-time insights.

- Mention of Wing drone deliveries for relief efforts during Hurricane Helene.

Android XR and Future Form Factors

- Introduction of Android XR as the "first Android platform built in the Gemini era," supporting a spectrum of devices from headsets to lightweight glasses.

- Collaboration with Samsung (Project Moohan headset) and Qualcomm on Android XR.

- Gemini in Android XR understands context and intent in richer ways.

- Glasses with Android XR are designed for all-day wear, with cameras, microphones, speakers, and optional in-lens displays.

- Demonstration of Gemini in Android XR glasses helping with navigation, information retrieval (identifying a coffee shop), scheduling, and live translation (Hindi and Farsi demonstration, with English translation displayed).

- Partnership with eyewear companies Gentle Monster and Warby Parker to build stylish glasses with Android XR.

New Google AI Subscription Plans

- Introduction of Google AI Pro (available globally) offering a "full suite of AI products" and the latest models like Veo 3 and Lyria 2.

- Introduction of Google AI Ultra, including higher rate limits, access to 2.5 Pro Deep Think (when ready), Flow with Veo 3, YouTube Premium, and significant storage.

Notable Quotes

- "In our Gemini era, we are just as likely to ship our most intelligent model on a random Tuesday in March or a really cool breakthrough like AlphaEvolve just a week before. We want to get our best models into your hands and our products ASAP. And so we are shipping faster than ever." - Sundar Pichai

- "This time last year, we were processing 9.7 trillion tokens a month across our products and APIs. Now we are processing 480 trillion monthly tokens. That's about a 50x increase in just a year." - Sundar Pichai

- "Today, over 7 million developers have built with the Gemini API across both Google AI Studio and Vertex AI, over 5x growth since last I/O." - Sundar Pichai

- "You can see how well it matches the speaker's tone, patterns, and even their expressions. We are even closer to having a natural and free flowing conversation across languages." - Sundar Pichai (referencing real-time translation)

- "Now imagine if those responses could sound like you. That's the idea behind Personalized Smart Replies." - Sundar Pichai

- "We're living through a remarkable moment in history, where AI is making possible an amazing new future." - Demis Hassabis

- "We continue to double down on the breadth and depth of our fundamental research to invent the next big breakthroughs that are needed for artificial general intelligence." - Demis Hassabis

- "Gemini is already the best multimodal foundation model, but we're working hard to extend it to become what we call a world model." - Demis Hassabis

- "AI systems will need world models to operate effectively in the real world." - Demis Hassabis

- "As people use AI Overviews, we see they are happier with their results, and they search more often. In our biggest markets, like the US and India, AI Overviews are driving over 10% growth in the types of queries that show them." - Sundar Pichai

- "AI Mode is Search transformed, with Gemini 2.5 at its core. It's our most powerful AI search, able to tackle any question." - Liz Reid

- "It looks like someone is ready to get their hands dirty and plant a green bean seedling." - Project Astra prototype response in multimodal interaction demo.

- "Our goal is to make Gemini the most personal, proactive, and powerful AI assistant." - Josh Woodward

- "This technology is the most state of the art in the industry at scale. And it allows us to visualize how billions of apparel products look on a wide variety of people." - Vidhya Srinivasan (referencing visual shopping)

- "Today, I'm excited to announce our new state of the art model, Veo 3." - Josh Woodward

- "That means that Veo 3 can generate sound effects, background sounds, and dialogue. Now you prompt it and your characters can speak." - Josh Woodward

- "This isn't just another tool... It's a game changer... This is just like, mind blowing to me. The potential is almost limitless." - AI Filmmaker quote about Flow

- "It's the first Android platform built in the Gemini era, and it supports a broad spectrum of devices for different use cases, from headsets to glasses and everything in between." - Shahram Izadi (referencing Android XR)

- "With Google Maps in XR, you can teleport anywhere in the world simply by asking Gemini to take you there." - Shahram Izadi

- "They're my personal teleprompter, and I have prescription lenses. So I can see you all." - Shahram Izadi (referencing the Android XR glasses prototype)

- "We want you to be able to wear glasses that match your personal taste." - Shahram Izadi (referencing partnerships with eyewear companies for Android XR glasses)

- "The opportunity with AI is truly as big as it gets." - Sundar Pichai